AssetScan is our new, patent-pending technology, which uses photographic data from remote vehicles and drones, combines this with the power of artificial intelligence and machine vision, and then maps signs of structural deterioration or degradation into digital twins.

Introduction

Determining the integrity of large assets can be difficult, expensive, and dangerous, often requiring specialist operatives to access facades by rope access or scaffolding, or to enter confined spaces. Once this data has been collected, it is often not referenced in 3D space. Additionally, assets can only be assessed where operatives have been able to look, so signs of deterioration can be missed.

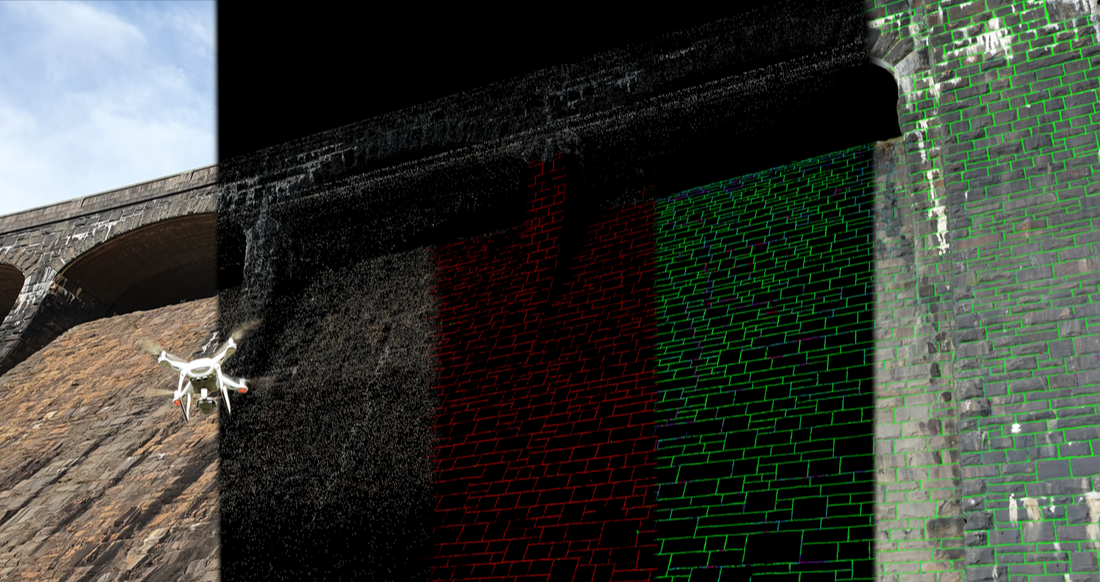

AssetScan, a new patent-pending product, instead uses images collected from UAVs, crawlers, roving cameras, or ROVs. These images are passed through our specially trained machine learning algorithms to highlight features of interest, and the condition of each feature is then scored to determine its level of deterioration. The images can also be used to build a 3D digital twin of the structure, with the machine learning results draped across it. This enables engineers to quickly locate and track deterioration in large assets.

For example, we recently used AssetScan to detect mortar lines on dressed masonry and then score the condition of the mortar, in order to determine the location and amount of works required to repoint the structure. The process was undertaken as follows:

1. Drone survey of the structure
2. Photogrammetric 3D digital twin production
3. Machine vision detects features of interest
4. AI scores the condition of these features
5. The results are mapped back into the digital twin
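
The final step, mapping image-based results back onto the 3D model, can be illustrated with a simple pinhole camera projection. The sketch below is an assumption for illustration only and is not AssetScan's actual method: it assumes vertices are already expressed in the camera's coordinate frame, ignores lens distortion and occlusion, and uses hypothetical names (`project_point`, `drape_scores`, `score_map`) and hypothetical intrinsics (`fx`, `fy`, `cx`, `cy`).

```python
from typing import List, Optional, Sequence, Tuple

Point3D = Tuple[float, float, float]


def project_point(point: Point3D, fx: float, fy: float,
                  cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Project a camera-frame 3D point to integer pixel coordinates (u, v).

    Uses the standard pinhole model: u = fx * x / z + cx, v = fy * y / z + cy.
    Returns None when the point lies on or behind the camera plane.
    """
    x, y, z = point
    if z <= 0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return int(round(u)), int(round(v))


def drape_scores(vertices: Sequence[Point3D],
                 score_map: Sequence[Sequence[float]],
                 fx: float, fy: float, cx: float, cy: float
                 ) -> List[Optional[float]]:
    """Look up a per-pixel condition score for each mesh vertex.

    score_map is a hypothetical 2D grid of scores indexed as [row][column].
    Vertices that fall outside the image, or behind the camera, get None.
    """
    height, width = len(score_map), len(score_map[0])
    draped: List[Optional[float]] = []
    for vertex in vertices:
        pixel = project_point(vertex, fx, fy, cx, cy)
        if pixel is None:
            draped.append(None)
            continue
        u, v = pixel
        inside = 0 <= u < width and 0 <= v < height
        draped.append(score_map[v][u] if inside else None)
    return draped


# Toy data: a 3x3 score image and three vertices (all values are made up).
score_map = [[0.0, 0.0, 0.0],
             [0.0, 0.5, 0.0],
             [0.0, 0.0, 0.9]]
vertices = [(0.0, 0.0, 2.0), (2.0, 2.0, 2.0), (0.0, 0.0, -1.0)]
print(drape_scores(vertices, score_map, fx=1.0, fy=1.0, cx=1.0, cy=1.0))
```

In practice a vertex is usually visible in several photographs, so a real workflow would also need to choose between, or blend, the scores from overlapping images.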

Materials

The processes developed can be retrained to look for any type of deterioration or degradation in any construction material, provided there are visual cues that can be used for detection. Photographic data of the feature or deterioration of interest is required before AssetScan can be tailored to a specific use case, but once this has been done an 'off-the-shelf' version of the artificial intelligence and machine vision algorithms can be produced.

The following lists contain examples of the types of degradation that can be highlighted by AssetScan:

CONCRETE

PAVEMENTS

METALWORK

ROOFING

ENGINEERED FILL/EMBANKMENTS

MASONRY

TIMBER

Machine Learning

Manually identifying continuous fields of information in imagery is time-consuming and therefore expensive. For especially large structures, the task may need to be shared between several members of staff, which leads to inconsistency between assessors and to subjective judgement changing over the course of the work. AssetScan adopts an alternative approach: manually produced training data is used to teach machine learning algorithms to look for features and defects. This improves consistency and significantly reduces the time taken for identification and classification.

AssetScan uses two principal processes. The first locates the features of interest. The second classifies the selected features against a set of known conditions. An example using masonry is shown below.
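
The structure of this two-stage approach (locate, then classify) can also be sketched in code. The example below is a toy illustration under stated assumptions, not AssetScan's trained models: simple brightness thresholds stand in for both the detector and the classifier, and every name, threshold, and condition label (`locate_features`, `classify_condition`, `"good"`, `"poor"`) is hypothetical.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Image = Sequence[Sequence[int]]  # grayscale rows, values 0-255


@dataclass(frozen=True)
class Feature:
    row: int                  # image row occupied by the feature
    pixels: Tuple[int, ...]   # pixel intensities along that row


@dataclass(frozen=True)
class ScoredFeature:
    feature: Feature
    condition: str            # hypothetical labels: "good" or "poor"


def locate_features(image: Image, min_mean: float = 128.0) -> List[Feature]:
    """Stage 1 stand-in: treat bright rows as candidate mortar lines."""
    features = []
    for row_index, row in enumerate(image):
        if sum(row) / len(row) >= min_mean:
            features.append(Feature(row_index, tuple(row)))
    return features


def classify_condition(feature: Feature, gap_level: int = 60,
                       max_gap_fraction: float = 0.25) -> str:
    """Stage 2 stand-in: many dark gaps along the line suggest lost mortar."""
    gaps = sum(1 for pixel in feature.pixels if pixel < gap_level)
    fraction = gaps / len(feature.pixels)
    return "poor" if fraction > max_gap_fraction else "good"


def run_two_stage(image: Image) -> List[ScoredFeature]:
    """Locate features first, then classify only the located features."""
    return [ScoredFeature(f, classify_condition(f))
            for f in locate_features(image)]


# Toy image: dark rows are masonry units, bright rows are mortar lines.
image = [
    [40, 40, 40, 40],
    [200, 210, 205, 200],   # intact mortar line
    [40, 40, 40, 40],
    [240, 30, 240, 30],     # mortar line with dark gaps
]
for scored in run_two_stage(image):
    print(scored.feature.row, scored.condition)
```

Keeping the two stages separate means the classifier only ever sees regions the locator has selected, so each stage can be retrained or replaced independently.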

3D Outputs

Model outputs can be delivered in formats that can be imported into CAD and BIM packages, or simply opened on regular office laptops and desktops. The photogrammetry models can be georeferenced using survey markers and used to produce both meshes and point clouds. The machine learning results can similarly be exported in mesh format, highlighting either the features of interest or the condition of those features.
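
As an illustration of what a condition-coloured mesh export might look like, the sketch below writes a mesh with per-vertex colours to the ASCII PLY format, which many mesh viewers and CAD tools can read. The choice of PLY, the green-to-red colour ramp, the 0 to 1 score range, and the function names (`score_to_rgb`, `mesh_to_ply`) are all assumptions made for this example; the formats AssetScan actually exports are not specified here.

```python
from typing import Sequence, Tuple

Vertex = Tuple[float, float, float]
Face = Tuple[int, ...]


def score_to_rgb(score: float) -> Tuple[int, int, int]:
    """Map a score in [0, 1] to a colour: 0 is green, 1 is red (assumed ramp)."""
    s = min(max(score, 0.0), 1.0)  # clamp out-of-range scores
    return round(255 * s), round(255 * (1.0 - s)), 0


def mesh_to_ply(vertices: Sequence[Vertex], faces: Sequence[Face],
                scores: Sequence[float]) -> str:
    """Return an ASCII PLY document with one colour per vertex."""
    if len(vertices) != len(scores):
        raise ValueError("expected exactly one score per vertex")
    lines = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(vertices)}",
        "property float x",
        "property float y",
        "property float z",
        "property uchar red",
        "property uchar green",
        "property uchar blue",
        f"element face {len(faces)}",
        "property list uchar int vertex_indices",
        "end_header",
    ]
    for (x, y, z), score in zip(vertices, scores):
        r, g, b = score_to_rgb(score)
        lines.append(f"{x} {y} {z} {r} {g} {b}")
    for face in faces:
        # Each face line starts with its vertex count, then the indices.
        lines.append(f"{len(face)} " + " ".join(str(i) for i in face))
    return "\n".join(lines) + "\n"


# Toy mesh: a single triangle with made-up condition scores at its corners.
ply_text = mesh_to_ply(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    faces=[(0, 1, 2)],
    scores=[0.0, 0.5, 1.0],
)
print(ply_text)
```

Writing `ply_text` to a file with a `.ply` extension should allow it to be opened in a standard mesh viewer, with each vertex shaded according to its score.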

Press PLAY to load the following 3D models:
